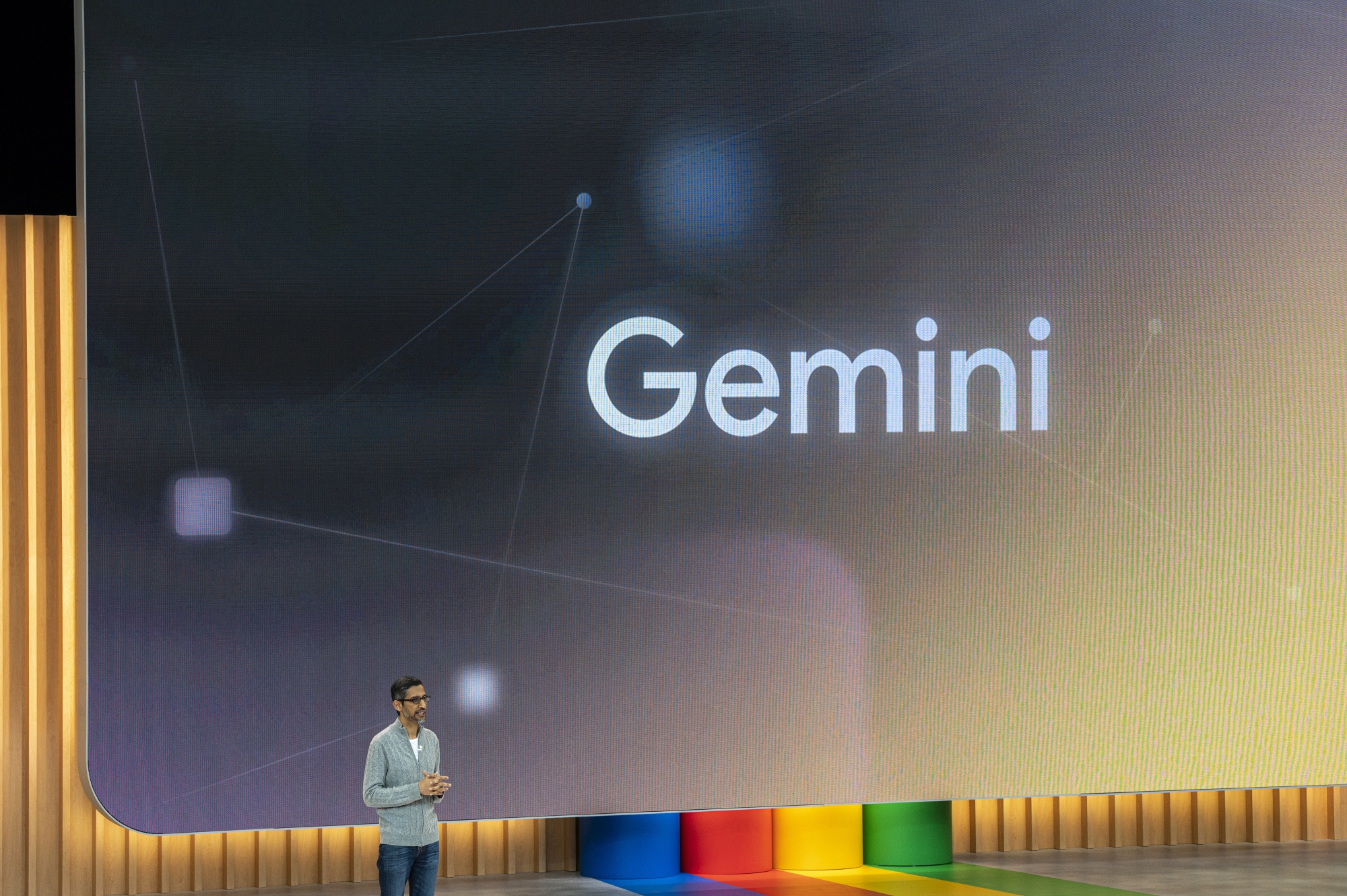

Google is facing scrutiny over its recently launched artificial intelligence model, Gemini, after questions were raised about the demonstration video created for its unveiling. The six-minute video showcased Gemini’s capabilities, including spoken conversations and visual recognition. However, the video did not disclose that the demo was not conducted in real time; Gemini’s responses were instead produced from still images and text prompts. The company added a disclaimer in the YouTube description but did not include it in the video itself.

The demonstration video, which aimed to illustrate Gemini’s potential, faced criticism for not providing an accurate representation of its capabilities. Google later confirmed to Bloomberg that the demo did not occur in real time, raising concerns about the transparency of the presentation. The company emphasized that the video was an “illustrative depiction” based on real multimodal prompts and outputs from testing.

This incident has drawn parallels to a controversy Google faced earlier in the year over a demonstration of its AI chatbots. During that episode, Google received criticism for what its employees referred to as a “rushed, botched” demonstration. That earlier controversy coincided with Microsoft’s planned showcase of its Bing integration with ChatGPT.

The Information reported this month that Google abandoned plans for in-person events to launch Gemini, opting for a virtual launch instead. Google is competing intensely with Microsoft-backed OpenAI, whose GPT-4 has been a leading model in the AI space. In an attempt to assert its model’s superiority, Google released a white paper claiming that Gemini’s most powerful model, “Ultra,” outperformed GPT-4 in various benchmarks, though the improvements were incremental.

The controversy surrounding the demonstration video underscores the importance of transparency in showcasing AI capabilities. As the AI industry advances and competition intensifies, companies are under increasing scrutiny to provide accurate representations of their models’ capabilities and performance. The incident has also sparked discussions about the need for ethical considerations and responsible practices in presenting and marketing AI technologies to the public.