April 24, 2024

Copyright 2023, IT Voice Media Pvt. Ltd.

All Rights Reserved

By Nikhil Taneja, Managing Director-India, SAARC & Middle East

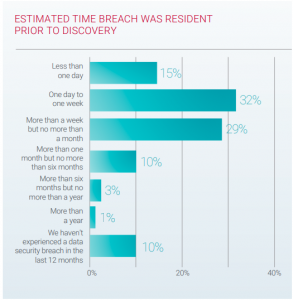

Ninety percent of organizations that experienced a breach in the past 12 months believed that dwell time in their networks was one month or less. That is much shorter than the average time breaches remained in networks before discovery, as determined by two recent large empirical studies.

Attacks Still Find a Way

API attacks: Access violations, which are the misuse of credentials, and denial of service (DoS) are the most common daily API attacks reported in the survey. Other threats included injections, data leakage, element attribute manipulations, irregular JSON/XML expressions, protocol attacks and brute force. Gartner predicts that, by 2022, API abuses will move from an infrequent to the most frequent attack vector, resulting in data breaches for enterprise web applications.

Application Denial-of-Service attacks: Twenty percent of organizations experienced DoS attacks on their application services every day. Buffer overflow was the most common attack type.
