Just moved into a smart home full of automated gadgets – from light switches to appliances to heating systems – but don't know how to set them up and program them to follow your commands? Read on.

A group of computer science researchers in the US may have found a workable programming solution for you.

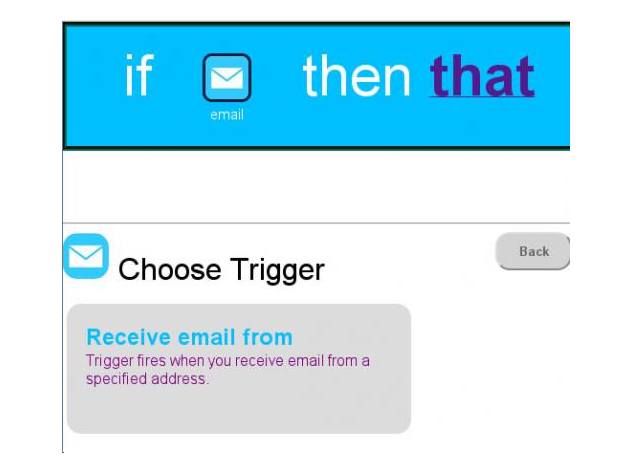

Through a series of surveys and experiments, the researchers show that a style of programming they term “trigger-action programming” provides a powerful and intuitive means of talking to smart home gadgets.

“We live in a world now that is populated by machines that are supposed to make our lives easier, but we cannot talk to them. Everybody out there should be able to tell their machines what to do,” said Michael Littman, a professor of computer science at Brown University.

The trigger-action paradigm is already gaining traction on the Web, where services such as IFTTT ("if this, then that") let people automate tasks across various Internet services.

During the study, the researchers started by asking workers on ‘Mechanical Turk’ – Amazon’s crowdsourcing marketplace – what they might want a hypothetical smart home to do.

The team then evaluated answers from 318 respondents to see whether those activities would require some kind of programming and, if so, whether the program could be expressed as triggers and actions.

Most of the programming tasks fit nicely into the trigger-action format, the survey found. Seventy-eight percent of responses could be expressed as a single trigger and a single action.

Another 22 percent involved some combination of multiple triggers or multiple actions.
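To make the difference between the two kinds of rule concrete, here is a minimal Python sketch of how trigger-action rules might be represented. The `Rule` class, the `turn_on` helper, the device names and the sensor readings are all hypothetical illustrations, not the researchers' system or any commercial product's API. The sketch also assumes that multiple triggers are combined with "and".

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical snapshot of the home's state: sensor readings and device settings.
State = Dict[str, Any]


@dataclass
class Rule:
    """A trigger-action rule: if every trigger holds, run every action."""
    name: str
    triggers: List[Callable[[State], bool]]
    actions: List[Callable[[State], None]]

    def fire_if_triggered(self, state: State) -> bool:
        # Assumption for this sketch: multiple triggers are combined with AND.
        if all(trigger(state) for trigger in self.triggers):
            for action in self.actions:
                action(state)
            return True
        return False


def turn_on(device: str) -> Callable[[State], None]:
    # Hypothetical action: record in the state that a device was switched on.
    def action(state: State) -> None:
        state[device] = "on"
    return action


# Single trigger, single action: "If it gets cold, turn on the heating."
heating_rule = Rule(
    name="warm the house",
    triggers=[lambda s: s["temperature_c"] < 18],
    actions=[turn_on("heating")],
)

# Multiple triggers and multiple actions:
# "If it is after sunset and someone arrives home, turn on the porch and hall lights."
arrival_rule = Rule(
    name="welcome home",
    triggers=[lambda s: s["after_sunset"], lambda s: s["someone_arrived"]],
    actions=[turn_on("porch_light"), turn_on("hall_light")],
)

if __name__ == "__main__":
    home: State = {
        "temperature_c": 16, "after_sunset": True, "someone_arrived": True,
        "heating": "off", "porch_light": "off", "hall_light": "off",
    }
    for rule in (heating_rule, arrival_rule):
        if rule.fire_if_triggered(home):
            print(f"{rule.name}: fired")
    print(home)
```

A rule of this shape reads almost like the plain-English request it came from, which is the property the researchers argue makes the approach usable by non-programmers.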

Taken together, the researchers say, the results suggest that trigger-action programming is flexible enough to do what people want a smart home to do, and simple enough that non-programmers can use it.

People are more than ready to have some form of finer control over their devices.

“You just need to give them a tool that allows them to operate those devices in an intuitive way,” said Blase Ur, a graduate student at Carnegie Mellon University.

The research was presented on Monday at the Conference on Human Factors in Computing Systems (CHI 2014) in Toronto.