
A Peek Inside Google’s Efforts To Create A General-Purpose Robot

April 19, 2024

Copyright 2023, IT Voice Media Pvt. Ltd.

All Rights Reserved

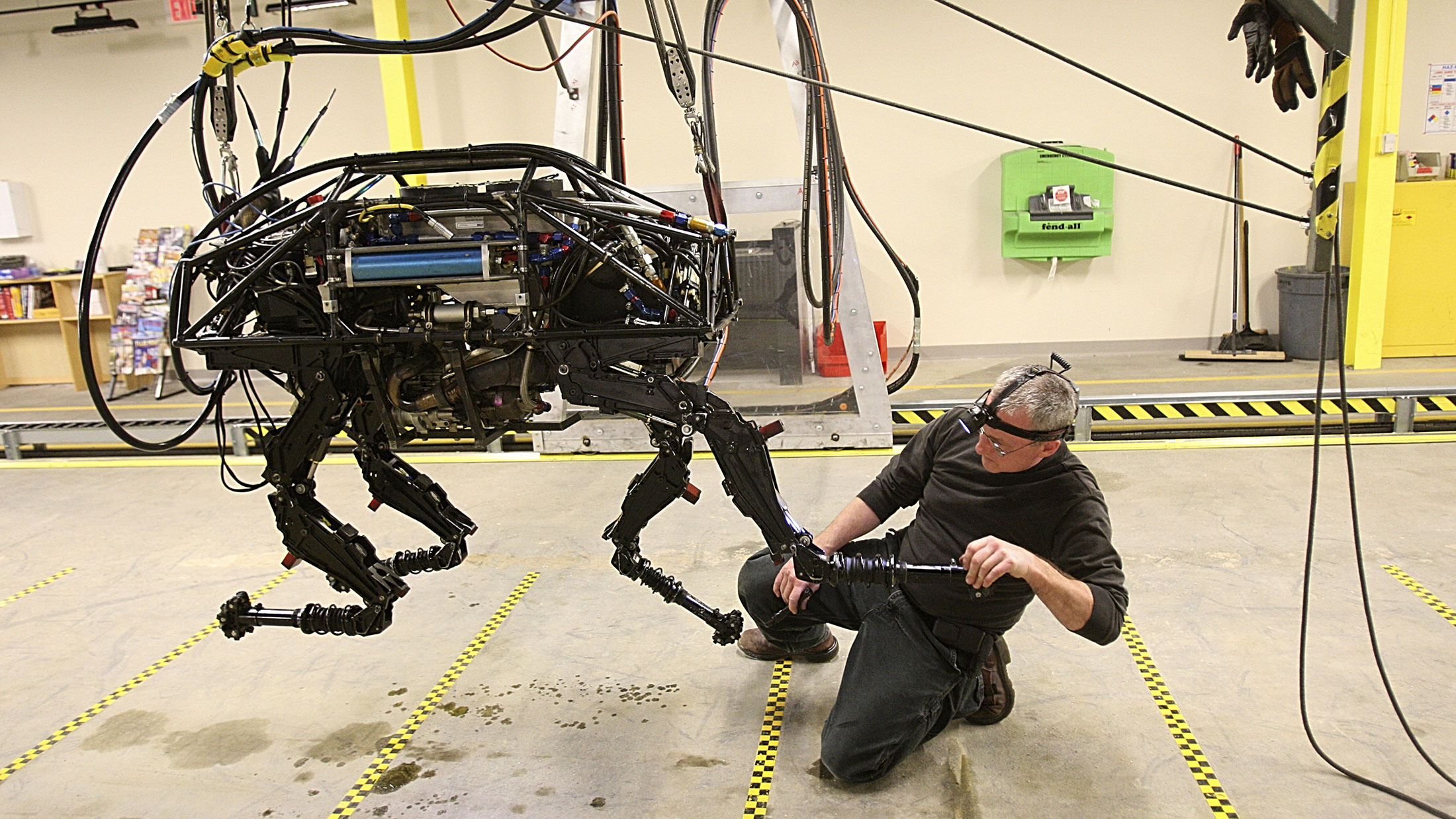

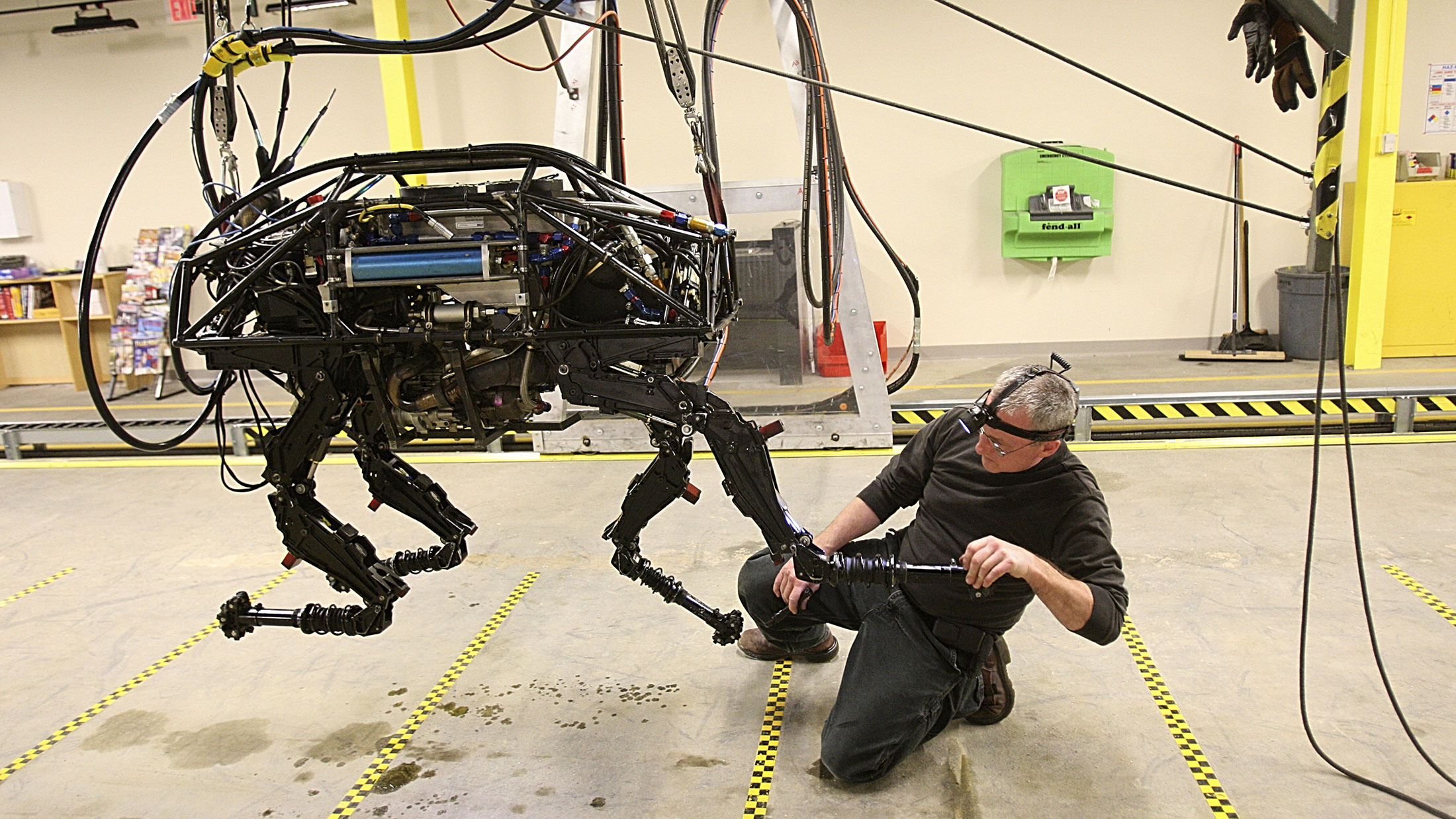

sci-fi movies, have been viewed more than 90 million times online. Despite that YouTube fame, Google is reluctant to discuss its robotics operation publicly. Behind the scenes, the company is laying the groundwork for intelligent, multipurpose robots, according to interviews with people familiar with the projects and research published in academic repositories.
